Deepfakes are videos or images in which a person's voice, face or body has been digitally altered so that they appear to be “saying” something they never said or to be someone else entirely.

Typically, deepfakes are used to deliberately spread false information or are made with malicious intent. They can be designed to harass, intimidate, demean and undermine people. Deepfakes can also create misinformation and confusion about important issues.

Further, deepfake technology can fuel other unethical actions like creating revenge porn, which disproportionately harms women.

Dr. Paromita Pain, an assistant professor of global media in the Reynolds School of Journalism, explains how one can identify deepfakes.

How can someone detect deepfakes?

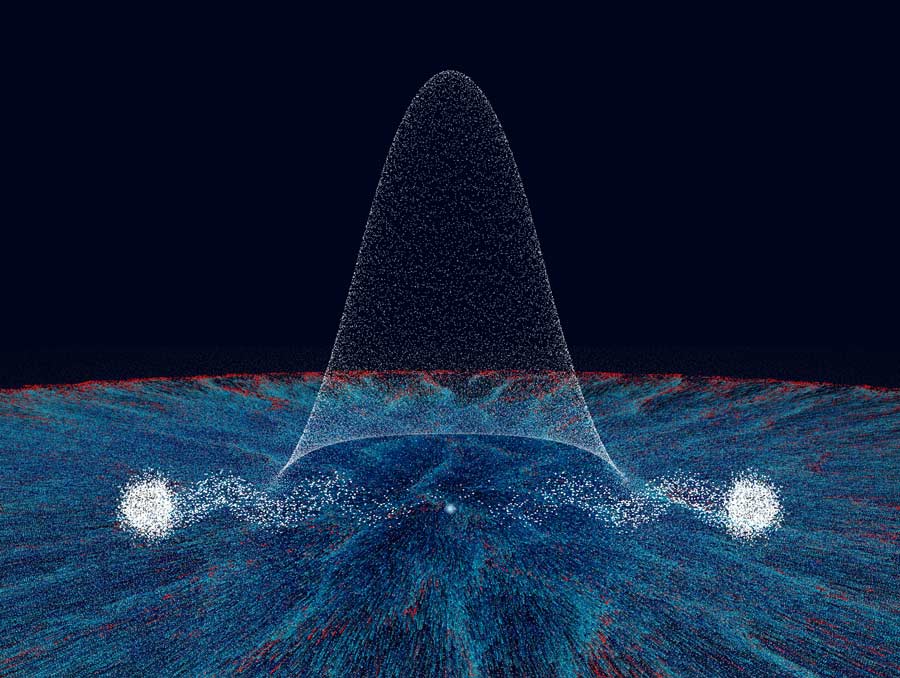

Detecting deepfakes is getting more difficult as the technology used to create them becomes more sophisticated. In 2018, researchers in the United States demonstrated that deepfake faces didn’t blink the way humans do, which was considered an effective way to tell whether images and videos were fake.

However, as soon as the study was published, deepfake creators began correcting this flaw, making deepfakes even more difficult to detect. Oftentimes, research designed to help detect deepfakes ends up helping to improve deepfake technology.

But not all deepfakes are products of sophisticated technology. Poor-quality material is usually easier to detect, as the lip movements may not sync well with the audio or the skin tone may seem odd. Additionally, details like hair strands are often harder for deepfake creators to reproduce. Studies have also shown that erratic reflections on jewelry, teeth and skin can reveal deepfakes.

Generally, today's algorithms render frontal views of a face better than side profiles, which are harder to emulate, according to ZDNet, a technology magazine.

As deepfake technology becomes more complex and harder to detect, more resources are becoming available to help individuals spot deepfakes on their own while scrolling through social media or other online forums.

For example, the Massachusetts Institute of Technology (MIT) created a Detect Fakes website to help people identify deepfakes by focusing on small details.

MIT highlights several “artifacts” that can give a deepfake away. Spotting them means paying close attention to specific attributes like facial transformations, glare, blinking, lip movements, natural sounds like coughs or sneezes and other characteristics like beauty marks and facial hair.

Fact-checking extends to deepfakes, and the same rules of vetting and verifying information apply. If what you hear or see seems too good to be true, it probably is.

Cross-checking content with other reliable sources is necessary not only to understand the information better, but also to find out whether the information is real at all.

What should someone do if they see a deepfake?

If you find a deepfake, do not share it. You may think that you can share it with friends as an example of what a deepfake looks like, but remember that once you share something, it often takes on a life of its own.

Additionally, if you find yourself in a deepfake, immediately contact a lawyer with experience in media law and related areas. Even if a photo or video is seemingly made for “fun,” it is still illegal if it has been made without your knowledge or permission.