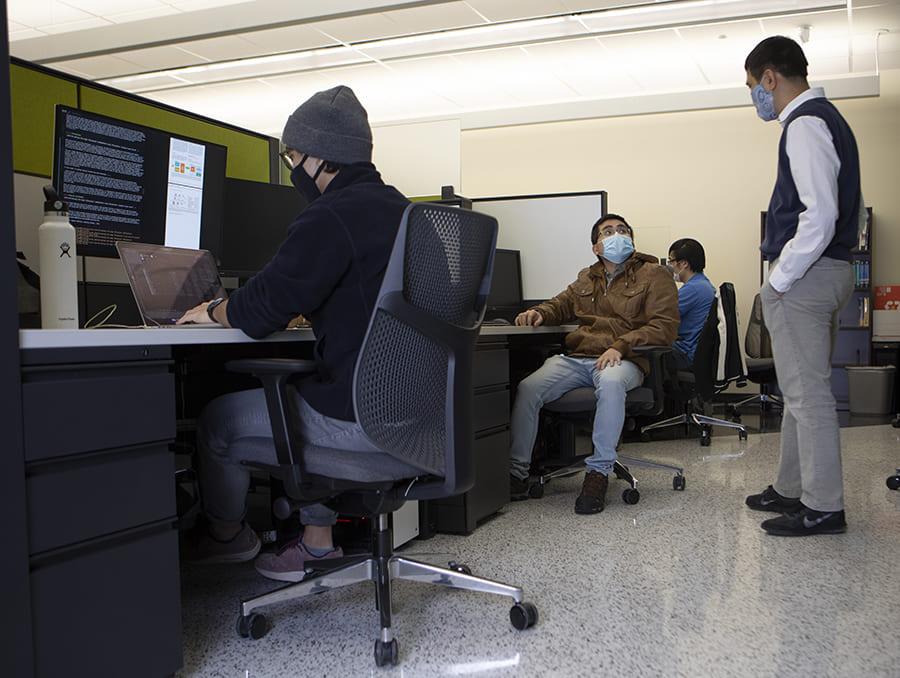

Tin Nguyen and his Ph.D. students Duc Tran, Hung Nguyen and Bang Tran have used the data processing power of machine learning to develop a novel tool to support the research of life scientists. Named scDHA (single-cell Decomposition using Hierarchical Autoencoder), the tool uses machine learning to address a key problem life scientists run into during their research: too much data to process. With their results recently published in Nature Communications as “Fast and precise single-cell data analysis using a hierarchical autoencoder,” Nguyen’s team is now looking to serve fellow researchers by using the tool to support their analysis of large quantities of cell data.

Nguyen was kind enough to participate in a Q&A about the tool to illustrate how it works and describe some of its capabilities.

What problem does the tool help life scientists (biologists and medical doctors) overcome?

Biotechnology has advanced to the point where scientists can measure the gene expression of individual cells in our body. This technology is called single-cell sequencing. One experiment can generate expression data for millions of cells and tens of thousands of genes, which can be represented as a matrix with millions of rows (cells) and tens of thousands of columns (genes). It is hard to analyze such data. Adding to the challenge are dropout events, in which many genes and cells cannot be measured because of the low amount of biological material available for each cell. It is extremely difficult to mine such data to gain biological knowledge.
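To make the shape of the problem concrete, here is a minimal sketch of a cells-by-genes expression matrix with dropout zeros. It is only an illustration: the function names, the count range, the matrix size and the 60 percent dropout rate are all assumptions chosen for the example, not figures from the study.

```python
import random

def simulate_expression(n_cells, n_genes, dropout_rate, seed=0):
    """Return a cells x genes matrix of counts with dropout zeros injected."""
    rng = random.Random(seed)
    matrix = []
    for _ in range(n_cells):
        row = []
        for _ in range(n_genes):
            count = rng.randint(1, 50)        # hypothetical "true" expression count
            if rng.random() < dropout_rate:   # measurement lost: recorded as zero
                count = 0
            row.append(count)
        matrix.append(row)
    return matrix

def zero_fraction(matrix):
    """Fraction of entries that were recorded as zero."""
    total = sum(len(row) for row in matrix)
    zeros = sum(1 for row in matrix for value in row if value == 0)
    return zeros / total

# Toy size only; real experiments have millions of rows and
# tens of thousands of columns.
expr = simulate_expression(n_cells=200, n_genes=50, dropout_rate=0.6)
print(len(expr), len(expr[0]))         # rows are cells, columns are genes
print(round(zero_fraction(expr), 2))   # close to the assumed dropout rate
```

Even in this toy matrix, most entries carry no signal, which is why methods that work on the raw values struggle.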

How does the tool—scDHA (single-cell Decomposition using Hierarchical Autoencoder)—work?

To mine knowledge from such large and noisy data, we used multiple state-of-the-art techniques in machine learning. First, we developed a novel non-negative kernel autoencoder (a neural network) to eliminate genes that do not play important roles in differentiating the cells. Second, we developed a stacked Bayesian autoencoder (also a neural network) to transform the data from tens of thousands of dimensions to a space with only 15 dimensions. Finally, we developed four different techniques to: (1) visualize the transcriptome landscape of millions of cells, (2) group them into different cell types, (3) infer the developmental stages of each cell, and (4) build a classifier to accurately classify the cells of new data.
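The first two steps, filtering genes and then compressing the data, can be sketched in a few lines. This is not the scDHA implementation: as stated assumptions, a simple variance filter stands in for the non-negative kernel autoencoder, and a plain linear autoencoder trained by gradient descent stands in for the stacked Bayesian autoencoder. The toy data, the function names and the 2-dimensional latent space (instead of 15) are also hypothetical, and the code uses only the Python standard library so it runs anywhere.

```python
import random

rng = random.Random(0)

def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def variance(values):
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)

def keep_most_variable_genes(cells, n_keep):
    """Stand-in gene filter: keep the n_keep columns with the highest variance."""
    columns = transpose(cells)
    ranked = sorted(range(len(columns)), key=lambda j: variance(columns[j]), reverse=True)
    kept = sorted(ranked[:n_keep])
    return [[row[j] for j in kept] for row in cells], kept

def center(cells):
    """Subtract each gene's mean so the autoencoder needs no bias terms."""
    means = [sum(col) / len(col) for col in zip(*cells)]
    return [[v - m for v, m in zip(row, means)] for row in cells]

def reconstruction_error(x, w_enc, w_dec):
    """Mean squared reconstruction error per cell."""
    recon = matmul(matmul(x, w_enc), w_dec)
    return sum((r - v) ** 2 for rr, xr in zip(recon, x) for r, v in zip(rr, xr)) / len(x)

def train_autoencoder(x, latent_dim, epochs=300, lr=0.01):
    """Train a linear autoencoder (one encoder and one decoder matrix)."""
    n, d = len(x), len(x[0])
    w_enc = [[rng.gauss(0, 0.1) for _ in range(latent_dim)] for _ in range(d)]
    w_dec = [[rng.gauss(0, 0.1) for _ in range(d)] for _ in range(latent_dim)]
    x_t = transpose(x)
    scale = 2.0 * lr / n
    for _ in range(epochs):
        z = matmul(x, w_enc)                     # encode: cells x latent_dim
        recon = matmul(z, w_dec)                 # decode: cells x genes
        resid = [[r - v for r, v in zip(rr, xr)] for rr, xr in zip(recon, x)]
        grad_dec = matmul(transpose(z), resid)   # latent_dim x genes
        grad_enc = matmul(matmul(x_t, resid), transpose(w_dec))  # genes x latent_dim
        w_dec = [[w - scale * g for w, g in zip(wr, gr)] for wr, gr in zip(w_dec, grad_dec)]
        w_enc = [[w - scale * g for w, g in zip(wr, gr)] for wr, gr in zip(w_enc, grad_enc)]
    return w_enc, w_dec

# Toy data: 60 cells, 8 genes. Six genes follow two hidden factors;
# the last two are near-constant and carry no information.
cells = []
for _ in range(60):
    f1, f2 = rng.gauss(0, 1), rng.gauss(0, 1)
    informative = [f1, f2, f1 + f2, f1 - f2, 0.5 * f1, 0.5 * f2]
    informative = [v + rng.gauss(0, 0.1) for v in informative]
    uninformative = [rng.gauss(0, 0.01) for _ in range(2)]
    cells.append(informative + uninformative)

filtered, kept_genes = keep_most_variable_genes(cells, n_keep=6)
x = center(filtered)
baseline = sum(v * v for row in x for v in row) / len(x)  # error of predicting all zeros
w_enc, w_dec = train_autoencoder(x, latent_dim=2)
final_loss = reconstruction_error(x, w_enc, w_dec)
embedding = matmul(x, w_enc)   # each cell is now described by 2 numbers

print(kept_genes)
print(len(embedding), len(embedding[0]))
print(final_loss < baseline)
```

The downstream steps (visualization, grouping, trajectory inference and classification) then operate on `embedding` rather than on the raw matrix, which is what makes them tractable.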

The tool has four different applications. Can you explain what it can do?

Visualization: This is the first step in the analysis pipeline for most life scientists. The data is transformed from a high-dimensional space into a 2D landscape, often called the transcriptome landscape, so life scientists can observe the landscape of the cells and the relative distances between them.

Cell segregation: The goal is to separate the cells into groups that are likely to have the same bodily functions and similar biological features. This is particularly important in constructing cell atlases for tissues in different organisms.

Time-trajectory inference: The goal is to infer the developmental trajectory of the cells in the experiment. We arrange the cells in an order that represents their development over time (the time-trajectory). Biologists can use this trajectory to investigate the mechanisms by which cells develop into different cell types.

Cell classification: This is particularly important for reusing data we have already collected to study new data. Given well-studied datasets with validated cell types and well-understood mechanisms, we build a classifier that can accurately classify the cells of new datasets.
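Two of these four applications, cell segregation and cell classification, can be illustrated on a toy 2D embedding. The sketch below is an assumption-laden stand-in rather than scDHA's own method: it uses basic k-means for grouping and a nearest-centroid rule for classifying a new cell, and the two simulated cell types, the function names and the coordinates are hypothetical.

```python
import random

def distance_sq(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def mean_point(points):
    """Coordinate-wise mean of a list of points."""
    return [sum(coord) / len(points) for coord in zip(*points)]

def kmeans(points, k, iterations=20, seed=0):
    """Basic k-means: returns (centroids, one label per point)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)   # start from k randomly chosen cells
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each cell joins its nearest centroid.
        labels = [min(range(k), key=lambda c: distance_sq(p, centroids[c]))
                  for p in points]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:                 # keep the old centroid if a cluster empties
                centroids[c] = mean_point(members)
    return centroids, labels

def classify(point, centroids):
    """Nearest-centroid classifier for a cell from a new dataset."""
    return min(range(len(centroids)), key=lambda c: distance_sq(point, centroids[c]))

# Toy embedding: two well-separated, hypothetical cell types.
rng = random.Random(1)
type_a = [[rng.gauss(0, 0.3), rng.gauss(0, 0.3)] for _ in range(30)]
type_b = [[rng.gauss(5, 0.3), rng.gauss(5, 0.3)] for _ in range(30)]
embedding = type_a + type_b

centroids, labels = kmeans(embedding, k=2)   # cell segregation
new_cell = [4.8, 5.1]                        # a cell from a "new" dataset
predicted = classify(new_cell, centroids)    # cell classification

print(labels[0] != labels[-1])     # the two types land in different groups
print(predicted == labels[-1])     # the new cell joins the second type
```

Real single-cell data is far less cleanly separated than this, which is where a carefully learned low-dimensional representation matters most.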

What doors to new knowledge (or techniques or technologies) do you foresee the tool opening?

Visualization: Important in exploring the data, especially when analyzing tissues that have not been studied before.

Cell segregation: Important in studying new tissues. This allows scientists to group cells according to their functions.

Time-trajectory inference: This is important to understand how cells divide and develop over time.

Cell classification: Allows us to classify cells of new datasets.

Currently, we understand only a little about human tissues at single-cell resolution. Single-cell technologies open up a whole new world for us to understand the composition of tissues and how they develop and interact. Without tools such as ours, it is impossible for life scientists to mine information from such large and high-dimensional data. The same can be said for thousands of model and non-model species.

What is next for the tool?

Single-cell technology is relatively new and expensive. We developed and validated this tool using data that were made available by the Broad Institute, Wellcome Sanger Institute, and NIH Gene Expression Omnibus. Now, we wish to connect with life scientists who want their single-cell data analyzed at UNR.

Can the tool be applied in other arenas?

Even though we developed this platform for single-cell data analysis, we wish to apply this technology to other research areas too, including subtyping cancer patients (clustering), patient classification and functional analysis (pathway analysis). Our research lab has published articles in high-level journals in these research areas.

Calling all collaborators

The Department of Computer Science and Engineering has the expertise to support research across campus. Those hoping to work with Nguyen and his team are encouraged to email Professor Nguyen to discuss the details of their project.