NevadaToday

Mind Blown

How University of Nevada, Reno researchers are rethinking the future of neuroscience

Prologue

Humans are smart – and have been for some time. Recent findings suggest that agriculture started in a primitive form up to 30,000 years ago. There's evidence of successful brain surgery being done in France over 8,000 years ago. Eratosthenes, the chief librarian for the great library of Alexandria, used the shadows cast by trees in different locations to calculate the circumference of the Earth more than two thousand years before the first object was sent into space.

Today, we're wirelessly connected to the largest information database in history via pocket rectangles, and millions of people around the world are right now utilizing metal boxes powered by explosions just to get to work.

It's not hard to look back across history, take stock of human achievement and feel a special kind of intelligent. Nature bestowed upon Homo sapiens sapiens a high-octane, calorie-guzzling super brain, and we've used it to forge metal, cure disease, explore the heavens and Earth, split atoms, map genomes and much, much more.

Humans are also spectacular at getting things wrong

Around the same time Eratosthenes was measuring tree shadows, his Greek compatriots still believed the brain existed solely to cool the blood. Even Plato believed sight was only possible because the human eye emitted a fire lit by Aphrodite herself. For the Greeks, religion obviously played heavily into what we would think of as early science, but scientific dogma still runs afoul of progress today.

Historically, challenging dogma has gotten scientists and scholars into a lot of trouble. Take the classic example of the Catholic Church’s persecution of Galileo. It took the Church 350 years to apologize and admit he was right about the Earth not being the center of the universe. Scientific findings are better received these days, and the scientific method itself is more rigorously employed – so why is challenging dogma still such an uphill battle?

The replication crisis and the half-life of facts

Replication crisis: many results from scientific studies are being taken as true and not independently verified

The first hurdle is what’s known as the replication crisis in science, and it’s especially problematic in psychology and neuroscience. Flashy studies make money, headlines and careers; repeating them rarely does, for two reasons. First, people want to read about big science news: new discoveries and mind-blowing facts. Replication studies, whether they find the same results or not, just don’t make news all that often. Second, there’s a lot to be learned in neuroscience, and replication just isn’t a top priority in the field. Doing pioneering research is a lot more enticing than checking someone else’s work.

Half-life of facts: knowledge evolves and changes over time, and some things held as facts are found to be incorrect

Hurdle number two is sometimes called the half-life of facts. Many beginning medical school students are told that, upon their graduation, half of what they will have learned won’t be true. The tricky part is finding out which half. This is one of the most important things about science: it’s built on a model that demands its practitioners question ideas, try to undermine long-held beliefs and seek truth above all else.

This is exactly what professors at the University of Nevada, Reno are doing, and their work is blowing the minds of the neuroscience community.

Down for the count

The number of neurons in the human brain is often compared to the number of stars in the night sky. That isn’t terrifically helpful for the vast majority of people, who haven’t spent much time counting stars, but it does convey the magnitude of the number. The human brain contains an estimated one hundred billion neurons.

For anyone who likes to see all of those zeroes lined up, that’s:

100,000,000,000

Professor Christopher von Bartheld came to the University 20 years ago with a particular interest in the quantification of brain cells. “Why are we interested in this? Well, we want to know what’s in our brains: how many neurons, how many glial cells. Some of them die off in certain diseases,” said von Bartheld. Unlike neurons, glial cells do not carry nerve impulses but rather serve to protect and nourish neuronal cells, among other functions. “It’s a pretty big thing, quantifying what’s in our brains.”

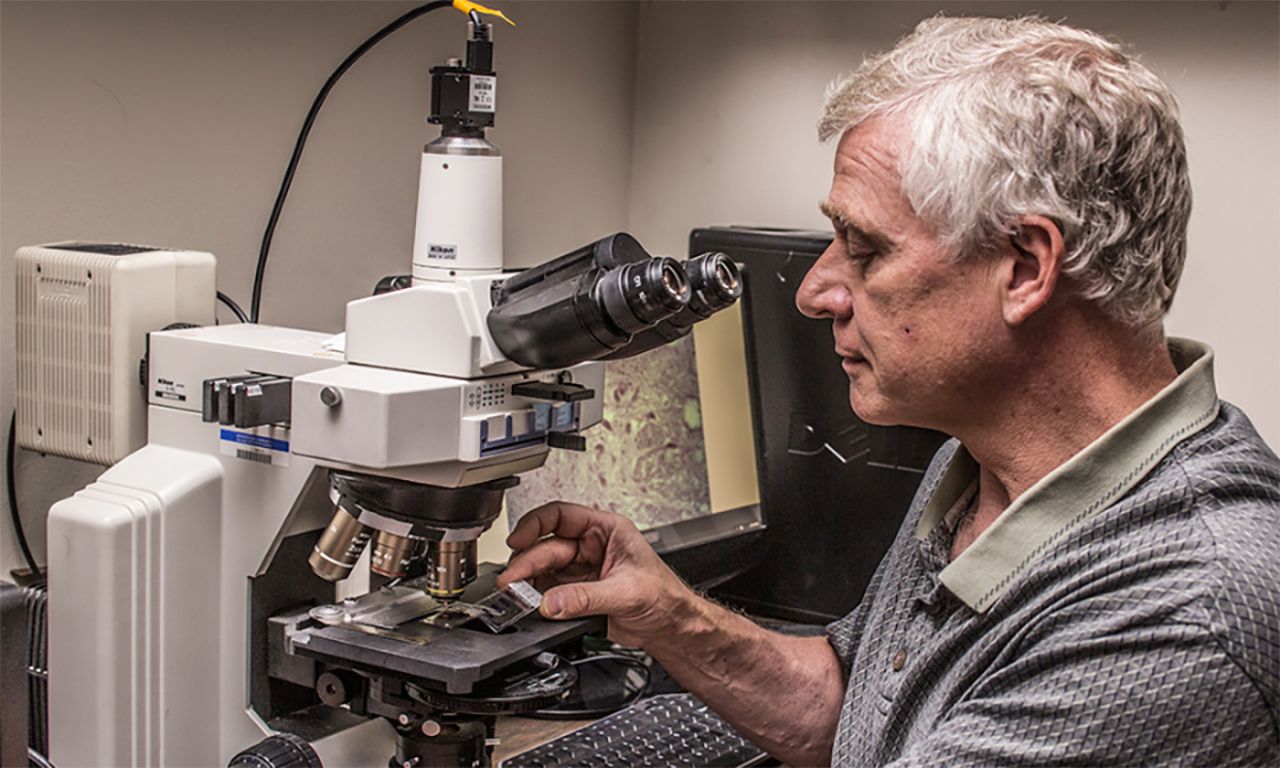

There are a few different methods for counting brain cells, but until 2005 the most accepted way was to take a thin slice of the brain, use a microscope and extrapolate the total number of cells from what was counted in that slice. There are a few flaws in that particular method, however, as von Bartheld would find.

First, just to make the cut that produces the slice, the brain tissue needs to be compressed slightly – like holding on to a loaf of bread to cut a piece. Because brain tissue squishes easily, this compression can cause up to a 20% difference in the cell count.

The second issue is that the human brain shrinks as we age, which means slices of the same volume could contain vastly different numbers of cells. The third major issue: ratios between neurons and glial cells aren't the same throughout the brain. When the count from one small sample is multiplied up to a total for the whole brain, these issues compound and make the counting errors more severe.
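The extrapolation step can be sketched in a few lines of Python. This is a minimal illustration, not the procedure any lab actually used: the function name, the sample count and the volumes are hypothetical, and only the 20% figure comes from the compression effect described earlier. It suggests why a small miscount matters: the percentage error stays the same after scaling, but in absolute terms it grows into billions of cells.

```python
def extrapolate_total(cells_in_sample, sample_volume_mm3, brain_volume_mm3):
    """Scale a count from one small sample up to a whole-brain estimate."""
    return cells_in_sample * brain_volume_mm3 / sample_volume_mm3


# Hypothetical values, chosen only to make the arithmetic easy to follow.
SAMPLE_VOLUME_MM3 = 1
BRAIN_VOLUME_MM3 = 1_200_000
TRUE_CELLS_IN_SAMPLE = 100_000

# Assumption: compression squeezes 20% more cells into the counted slice.
miscounted_cells = TRUE_CELLS_IN_SAMPLE * 120 // 100

true_total = extrapolate_total(TRUE_CELLS_IN_SAMPLE, SAMPLE_VOLUME_MM3, BRAIN_VOLUME_MM3)
biased_total = extrapolate_total(miscounted_cells, SAMPLE_VOLUME_MM3, BRAIN_VOLUME_MM3)

# The same 20% error, before and after it is multiplied up.
absolute_error = biased_total - true_total
relative_error = absolute_error / true_total

print(f"Error in the slice: {miscounted_cells - TRUE_CELLS_IN_SAMPLE:,} cells")
print(f"Error after scaling: {absolute_error:,.0f} cells ({relative_error:.0%})")
```

Shrinkage with age and uneven neuron-to-glia ratios would add further error terms on top of this one; they are left out here to keep the sketch short.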

"It was kind of an embarrassment because advocates of the newer methods said, 'Oh, these are theoretically unbiased; we don't even need to verify that.' Well, here's a systematic bias in this method."

Brain soup method: a method of liquefying the brain and using an antibody to stain neuronal cell nuclei, which are then counted

Fortunately, techniques for brain cell counting evolved, and in 2005 a brain soup method came out of a neuroscience research group in Brazil.

Brain soup: a new recipe for cell counting

Thanks to this new method, it was found there are fewer glial cells than neuronal cells in the brain. The issue, though, was that textbooks for the last fifty years have been telling neuroscientists there are ten times as many glia as there are neurons. “Where did this come from? Nobody could point to any studies that showed that there was a ten-to-one ratio,” von Bartheld said.

The brain soup method of cell counting found a one-to-one ratio of neurons to glia. That is 90% fewer glial cells than the number that had previously been assumed and, more important, used to guide scientific research. Von Bartheld and his graduate students reran the brain soup tests, which had never before been validated, and rigorously verified the accuracy of the technique.
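The 90% figure follows directly from the two ratios. As a rough check, the short Python sketch below takes the estimate of one hundred billion neurons given earlier and compares the glial counts implied by a ten-to-one and a one-to-one ratio. The variable names are illustrative only, and both ratios are treated as exact whole numbers for simplicity.

```python
NEURONS = 100_000_000_000  # estimate quoted earlier in this story

# Glial cells implied by each glia-to-neuron ratio.
glia_textbook = 10 * NEURONS   # the long-assumed ten-to-one ratio
glia_brain_soup = 1 * NEURONS  # the one-to-one ratio from the brain soup method

fewer = glia_textbook - glia_brain_soup
percent_fewer = fewer * 100 // glia_textbook

print(f"Textbook assumption: {glia_textbook:,} glial cells")
print(f"Brain soup estimate: {glia_brain_soup:,} glial cells")
print(f"Difference: {fewer:,} cells, or {percent_fewer}% fewer")
```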

The ten-to-one ratio was used to explain all sorts of things, including humans’ superior intelligence when compared to the rest of the animal kingdom. With this thinking, when higher ratios were found in an animal, it could suggest higher cognitive abilities – but all of this was based on an erroneous assumption.

The trouble with conventional wisdom is that it doesn’t need to be cited when referenced in research. Much like no one would care about a reference for the claim that our solar system contains one star, this ten-to-one ratio went unchecked for decades, a casualty of the replication crisis. Von Bartheld and his group traced the idea all the way back to the 1960s. The researcher who’d published the ratio originally used samples from the brain stem, which has far more glial cells relative to neurons than the rest of the brain.

"Nobel prize winners and the elite of neuroscience, in their textbooks they got it wrong, and some even thought there were up to five trillion glial cells – and that was the most respected textbook of neuroscience for decades."

Von Bartheld and his group prepared a review of neuroscience texts that showed the history of the incorrect ratio, but getting it through peer review wasn’t easy. They were initially met with resistance, told that the paper was “too historical.” There was some suspicion that journals didn’t want to besmirch the names of venerated researchers who’d used the incorrect ratio in their work without checking it, many of whom are still alive.

With persistence and some adjustments, the paper was accepted for publication in 2017. Time will tell how quickly this finding makes its way through the neuroscience community and out into textbooks, where it will stand as testament to the half-life of facts.

The eyes have it

Correlation isn’t necessarily causation – or that easy to see. For von Bartheld, it was curiosity, reading research outside of his usual purview after a new colleague piqued his interest, that opened his eyes.

Von Bartheld and his group had been studying “lazy eye,” an unfortunately common condition in children in which one eye deviates inward (cross-eyed) or outward (wall-eyed).

Strabismus: misalignment of the eyes, either inward or outward

These conditions are collectively referred to as strabismus; exotropia and esotropia are the wall- and cross-eyed forms, respectively. There wasn’t much research being done on how the muscles around the eye responsible for exotropia become dysfunctional or whether the dysfunction is due to the neurons that control them. It’s estimated that almost four percent of the U.S. population develops some sort of strabismus.

“There are a lot of people that have misalignment of the visual axis,” said von Bartheld. “My initial thought was: can we strengthen these eye muscles or their innervation with growth factors? Maybe we can help kids that have this ‘lazy eye’ thing?”

Innervate: to supply with nerves and stimulate

He and his group started researching the specific growth factors related to exotropia and how they might be able to adjust them to prevent the condition, but it was slow going at first. Exotropia can be caused by a muscle or tendon on one side of the eye being too long, which means it can be fixed surgically, as well as through the use of special eyewear.

“Binocular vision, the ability to use both eyes to see three dimensionally, develops in the visual cortex during the first few years of life. In order to have binocular vision, you have to have both eyes seeing the same thing during this critical period,” said von Bartheld. “Just forcing a kid to alternate between the eyes by patching the dominant eye doesn’t really help with 3D vision, so they really should be fixed with surgery.”

The surgery involves shortening the too-long muscle and/or tendon by removing a small segment, which used to be thrown away. Von Bartheld had to convince surgeons to start saving these pieces and sending them his way, and that took some time. Over the last decade and a half, his group has managed to collect “180 or so” samples this way. The control group for the tests and experiments? Organ donors.

Proteomics: the study of proteins and their functions

They started looking at gene expression and proteomics in the samples and were disappointed to find no obvious answers coming from the data. What they did find were some imbalanced growth factors. Growth factors are basically molecular signals used to influence the way a cell changes and matures. They include hormones, which control reproduction, development, behavior and more, and cytokines, which are small proteins that regulate cell growth and differentiation, as well as immune response.

One of the best things about being a researcher at a university is being able to connect with researchers in all sorts of fields. Sometimes these connections lead to serendipity. While von Bartheld and his team were collecting data from their samples, a new professor was hired to chair the psychiatry department. A prominent schizophrenia researcher, he had just published a meta-analysis looking at the cytokines related to the disease – and there was a match.

Defusing schizophrenia’s twenty-year time bomb

Biomarkers: measurable substances in an organism which indicate some phenomenon, including disease or infection

The vast majority of the time, children do not have schizophrenia. That is to say, the people who later develop the disease very rarely show signs before adulthood. The disease usually kicks in between the ages of 16 and 30 and usually later in the lives of women than in men. Many of the imbalanced genes in dysfunctional (strabismic) eye muscles have been identified as biomarkers for schizophrenia. This got von Bartheld thinking.

He started digging into the topic and found that in the last ten years there were five different studies done that looked at schizophrenia and strabismus. The findings were clear: there is a correlation between exotropic strabismus and schizophrenia. The two muscles that control horizontal eye movement are called the medial and lateral rectus muscles, and they pull the eye inward and outward, respectively. The medial rectus muscle is the culprit when it comes to exotropia because its inability to properly pull the eye inward allows the lateral rectus to pull it the other way.

The two muscles come from different tissues in embryonic development and are innervated by different parts of the brain. The medial rectus muscle is innervated from the midbrain. “The idea was: maybe that’s why you have the correlation only with one of them, not the other,” von Bartheld said.

The studies on the link between strabismus and schizophrenia all agreed that exotropic strabismus means a stronger likelihood of schizophrenia. All also suggested that kids with this condition should be checked on as early as possible. As with any disease, the earlier treatment is started, the better.

Von Bartheld saw two possibilities: correlation and causation.

- Correlation: The imbalance in the signaling molecules leads to both the abnormal brain development and the eye muscle dysfunction because they happen to utilize the same signaling molecules.

- Implication: Children with exotropia could be tested for this imbalance and diagnosed much sooner.

- Causation: The abnormal vision due to the imbalance of growth factors and resulting strabismus somehow causes or contributes to the abnormal brain development. There is a causal relationship between the two.

- Implication: Fixing the strabismus early enough may prevent schizophrenia.

He has his hunch. For one thing, there are no blind schizophrenics, nor have there been.

Rates of schizophrenia are dramatically lower in populations with perfect vision, too.

“If you’re an infant and you have this double vision because one eye goes this way and one goes that way, maybe you get kind of confused about what’s real and what’s not real,” said von Bartheld. “I can’t tell you why the problems with reality would appear when you’re 20 years older – or more like 30 years older for women.”

At first, the time gap between fixing the strabismus and the onset of schizophrenia left von Bartheld feeling crestfallen. “I would be long dead if we did a prospective study starting now. But I realized that they’ve actually been doing this surgery since the 1980s.” At that point, ophthalmologists didn’t know about the connection between strabismus and schizophrenia, but they have been keeping track of the patients to make sure the surgery did in fact improve vision.

This means that records may exist, and von Bartheld is hopeful that digging through these records will be fruitful.

Either way, the research von Bartheld’s group is doing is beneficial, but if the causal relationship could be proven, untold millions of cases of schizophrenia would be preventable. In 2013, the overall economic burden of schizophrenia in the United States alone was $155.7 billion, two and a half times what it was in 2002.

This kind of interdisciplinary cross-pollination of ideas is one of the hallmarks of the neuroscience program at the University. When things come together just right like this, it can be truly powerful.

Out of the picture

Assumptions can be dangerous. Very often, they go completely unnoticed, and that makes sense: it’s nearly impossible to get through a single day of reality without assuming a few things. No one would ever make it to work on time if every day started off without assuming the floor next to the bed exists or the knob in the shower still makes water come out when turned. In the same way that these go unnoticed every day, assumptions made in a scientific context may not become apparent for years.

Haptic perception: the way humans perceive objects using the sense of touch

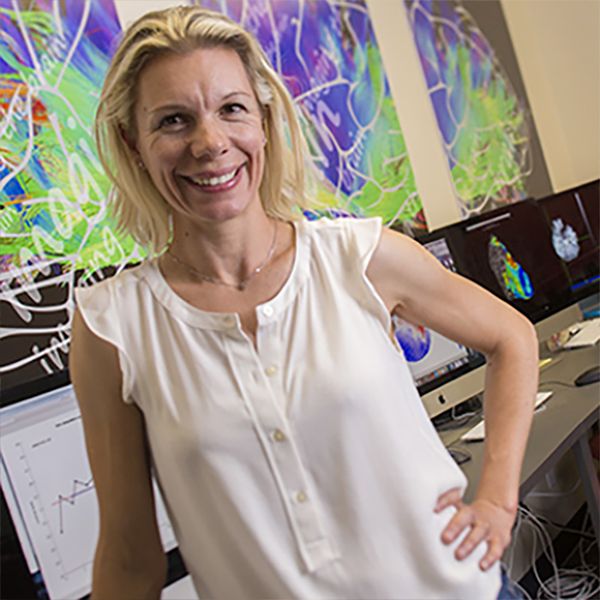

Associate Professor Jacqueline Snow started her career in neuroscience doing postdoctoral research on haptic perception. As she familiarized herself with the research already done in the field of perception, she noticed a recurring theme. “It became clear that as we move from studying haptic perception to visual perception, in which objects are perceived using the eyes, we switch from working with real objects to more impoverished representations of those objects,” Snow said. In this case, the impoverished representations are images, usually on a computer screen.

There are a number of perfectly good reasons this is the case. For one, it’s more convenient to store and present information in the form of computerized images. Images are easy to control and manipulate for experimental purposes, such as by changing their size, shape, color or timing. Labs also tend to already be full of equipment, computers, grad students and furniture. In the rare case that a box containing hammers, candlesticks, sunglasses, shoes, screwdrivers and other testable objects does find its way into a lab, these items are difficult to present in a scientifically rigorous fashion. Controlling presentation order and stimulus timing, for example, can present serious issues. There’s also the pesky fact that a functional magnetic resonance imaging (fMRI) machine is a giant magnet, so ferrous metals cannot be brought into the scanner, lest they become potentially life-threatening projectiles as they are drawn towards the magnet.

These and other pragmatic challenges associated with using real-world objects in neuroscience research led researchers to rely on images presented on computer screens rather than real objects – and this is where those assumptions come in. “It’s assumed in the literature that a two-dimensional image is an appropriate proxy for a real object in terms of understanding human perception and brain function, and our work suggests that may not be the case,” said Snow.

“Some of my research turns out to show that the brain responds very differently when people are looking at real objects compared to when they’re looking at images,” said Snow. “Using impoverished stimuli might give us a similarly impoverished understanding of how the brain functions.” This revelation takes the entire body of research done in this field and splits it into two categories:

- Image perception studies (i.e., most every study done up to this point)

- Real-world perception studies

Decades of studies now require a qualifier. It’s as if there were a scientist in Plato’s cave allegory who had dedicated her life to studying and meticulously documenting everything projected on the wall and understandably just called it ‘science’ – until it was revealed that actual reality was outside the whole time. Her work is perfectly valid, but now every study needs a caveat. Rather than ‘physics,’ it’s now ‘physics of the projections.’ She had been studying only a small part of the world and assumed it was all that existed.

A mind for pioneering

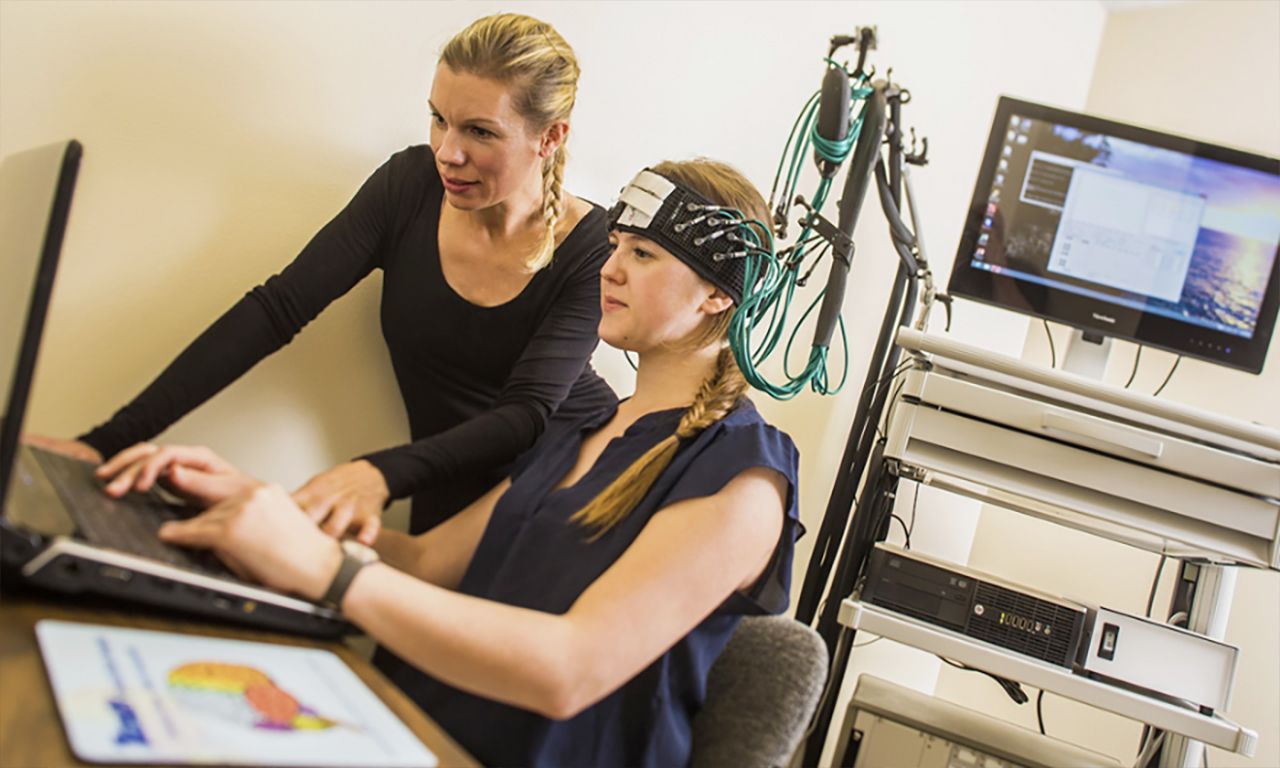

Snow and her team are right at the beginning of studying this new world of perception. “They each focus on different aspects of real-world cognition,” said Snow about the graduate students working with her. Their initial findings are more than a little promising.

“We find that real objects are more memorable than matched two-dimensional images of the same objects. We also find that real objects capture attention more so than 2-D images. Interestingly, real objects also capture attention more so than stereoscopic images of objects that look very similar in 3-D shape to their real-world counterparts,” said Snow. “Surprisingly, however, the unique effects that real objects have on attention disappear when the objects are moved out of reach or are presented behind a large transparent barrier that prevents access to the objects. Together, the findings indicate that the way we think about objects in our environment is influenced powerfully by whether or not the objects are graspable.”

The list goes on. Another of Snow’s students is studying how eye movement changes between two-dimensional images, three-dimensional image displays and real objects. “We always know we’re looking at a virtual object as opposed to the real thing, and it turns out that matters when it comes to eye movements,” said Snow.

Snow uses the example of looking at a virtual hammer on a screen and a real hammer that can be grasped. Previous work in Snow’s lab has shown that when looking at two-dimensional images of objects, such as tools, our attention is drawn automatically to the ‘business end’ of the tool (i.e., the head of a hammer) rather than its handle. “Eye movements are linked closely with attention. Because we typically know we’re looking at a representation as opposed to the real thing, this could matter when it comes to understanding how we make eye movements towards objects,” said Snow. These studies dig into how we look at and allocate our attention across individual objects and what part of the object is most relevant in real objects versus their representations.

One of the most interesting things Snow's group is investigating is how and why the brain responds differently to real objects versus images. One possibility is that when observing real objects, not only do visual-processing areas come online, but so too may brain areas involved in the physical acts of reaching and grasping, along with somatosensory areas, the areas responsible for the sense of touch. "For example, it could be that unlike image vision, we anticipate the motor and sensory consequences of interacting with tangible objects," said Snow. "Implications of this line of research include identifying sources of control signals in the brain that are suitable for neuroprosthetic implants, for example to help patients with paralysis to guide a robotic hand to grasp an object effectively. We want to tap into areas that are actually engaged during real-world vision."

Through the looking glass

“These are the first experiments of their kind that have been done anywhere in the world, so they’re revealing some important insights,” said Snow. As was mentioned above, it’s not surprising that these are the first of their kind. There are very real difficulties that Snow and her group had to overcome.

Presenting objects in a way that could be standardized across experiments, both in the lab and in the fMRI setting, required designing and building innovative stimulus display systems unlike those used in most other studies of visual perception. Then there’s coming up with a variety of graspable objects that don’t contain metal, like the very realistic-looking (but actually non-metallic) toy hammer, and making sure these objects can be easily and directly viewed by the test subject.

Normally, these kinds of tests would use a mirror to let the subject see the images presented while being scanned in the fMRI machine, but a mirror can make it hard to figure out where the object is and whether it is graspable. To get around this, Snow and her team have configured the fMRI machine at Renown Hospital so observers can be scanned with their head tilted forward, a bit like watching television in bed, while stimulus presentation and timing are controlled using custom-designed equipment including fMRI-compatible spectacles, LEDs and an infrared camera. “Set up for these kinds of experiments is complicated and can take hours,” said Snow. “We are fortunate to have the cooperation of Renown Health, especially their MRI technician Larry Messier, and support from the Center for Integrative Neuroscience.”

These factors, combined with the rarity of good test subjects, have led Snow all over the world seeking the "rare butterflies" (like twins with only one possessing a brain lesion in the proper area of the brain) and equipment she needs to complete her research.

"One thing that's really fundamental … is to develop this cutting-edge technology that enables us to bring the real world into more difficult testing environments," said Snow.

As with most fields of neuroscience research, there’s a lot still to be done and questions still to be answered.

Snow can rattle off dozens of potential research areas, from looking at robotics and virtual reality applications to studying the effect of display format on dietary choices and its potential relationship with rising rates of obesity, without even pausing to collect her thoughts.

“That’s one of the things I find most rewarding,” she said, “being able to work with talented students, and learning from others, to pursue new and fruitful avenues of investigation.”

The plastic brain

Daredevil is a Marvel comic book hero who was blinded by radioactive waste at a young age. As the story goes, his other senses were heightened to superhuman levels after the accident, allowing him to fight crime nocturnally in New York’s Hell’s Kitchen. His exploits have been immortalized in film, a popular Netflix series and myriad cartoons and comic books. Assistant Professor Fang Jiang has never heard of Daredevil.

Before getting into the reason this is notable, there are some misconceptions that need to be cleared up. First, humans use much more than 10 percent of the brain. In the same way that a bodybuilder wouldn’t use all of her strength to lift a cup of tea, using the entire brain for every task is just unnecessary. Parts of the brain specialize in doing different things. Whole sections are devoted to vision, for example, and they aren’t getting involved in wiggling toes or countering political points over Thanksgiving dinner, so for the simplest tasks, sure, maybe only a fraction of the brain is working on that particular thing, but the rest has other stuff to do.

Second, people are not born with a set number of brain cells that only declines with age and injury. There are a few places in the brain where new neurons are born on a daily basis – hundreds or even thousands of them – like the olfactory system, responsible for the sense of smell, or the hippocampus, which helps with the processing of emotions and spatial and episodic memories. The new cells seamlessly integrate themselves into the existing circuitry of the brain. This process is called neurogenesis, and recent studies show it continues well into advanced age.

Neurogenesis: the growth and integration of new neurons

Not only are different areas of the brain doing different things while brain cells are replaced at different rates within them, areas that tend to specialize in one task or another can actually begin to shift what they're good at. This is called neuroplasticity, and it's where Daredevil and Assistant Professor Jiang come back in.

Neuroplasticity: areas of the brain shift and improve their specialized tasks

In her lab, Jiang and her team study the brains of the blind and deaf. "I'm not sure about superheroes," she said, "but it is true they are better than sighted people at certain tasks." Her blind subjects are notably better at sound localization and discriminating auditory motion (figuring out where a sound is coming from and how it's moving, respectively), and they have a lower threshold for tactile sensation, which means higher sensitivity, touch-wise.

Of particular interest to Jiang’s work with the blind is the visual cortex, the part of the brain dedicated to vision. The visual cortex is a major part of the brain where a lot of information is processed, and fortunately, this processing power does not go to waste simply because there’s a lack of visual data. “When somebody becomes blind, … the visual cortex becomes involved in other tasks, other modalities, such as tactile and auditory,” she said. “This is why people say, ‘when you lose one sense, your other senses get heightened.’ That’s at a behavioral level. We’re looking at neural responses.”

Jiang and her group are finding the visual cortex doesn’t simply start picking up new things to do at random, either. “These brain regions, … usually they keep their normal functions, so there are regions of the visual cortex that specialize in motion, for example, and then they get recruited when you perform auditory motion or tactile motion tasks,” Jiang said. “It gets used because it’s highly specialized for motion processing.”

Sensory substitution: the brain uses a different sense to compensate for the loss of another sense.

This is the source of those heightened senses. It’s called sensory substitution. “We see, but it’s not really our eyes that are seeing. It’s our brains interpreting what we’re seeing,” Jiang said. In the absence of visual data, auditory and tactile input are used to build a map of the outside world.

If the auditory system were a computer, this process would be a bit like plugging in a high-end video card. It’s extra, highly specialized processing power being added to a system that, for sighted people, has to run on the basic model – their video cards being occupied with vision, in this case. Unfortunately for Daredevil, these changes are really only seen in people blind from birth or from a very, very early age. In the visual cortex, “plasticity is due to early development,” Jiang said. “Once it happens, it stays there.”

This is even true for those who are lucky enough to have their vision restored later in life. Jiang and her group have studied a few of these cases to see if the initial neuroplasticity could be reversed. “So far,” she said, “it looks like no.”

Ticking clocks and temporal windows

In the studies that Jiang and her group have done with the early deaf, she says it’s basically the same mechanism, just with different areas. There’s something to be said for the incessant ticking that accompanies some clocks, it turns out. For those who can hear it, it helps the brain keep track of time. “You usually get temporal information from the auditory system. When they become deaf, people show deficits in temporal processing,” Jiang said.

This part of her research is still being conducted, but preliminary findings suggest that deaf people distribute attention differently than those who can hear. Specifically, the information taken in by peripheral vision gets a lot more emphasis. Jiang and her group are running a variety of behavioral tests to track down just where these changes take place, and they’re finding interesting results. They recently reported that exposure to a delay between a self-generated motor action (like a keypress) and its sensory feedback (like a white circle appearing on the computer screen) induced different effects in the two groups of participants, with the hearing control group showing a recalibration effect for central stimuli only and deaf individuals for peripheral visual stimuli only.

Jiang and her group are also finding interesting results with facial recognition tasks. “Deaf people … are usually better at emotion processing,” Jiang said. “They show better performance with face matching tasks in the peripheral than hearing people do. We’re still trying to explain that one.”

Temporal binding window: a period of time between a sight and sound that, when appropriately short, causes the brain to integrate the two

Neuroplasticity isn’t always a boon. Jiang and her group have also been studying what’s known as the temporal binding window, during which sights and sounds are bound together in the brain.

This window is larger in children, but it gets smaller and thereby more accurate with age – that is, until it starts to widen again in the elderly. “If you have a larger binding window, you have a less realistic interpretation of the world,” Jiang said. “There’s one study that suggests this contributes to increased risk of falling in elderly people.”

Her team is currently conducting research on shortening this window and how possible recalibration is in elderly populations. Across these and other niche populations, researchers like Jiang continue to find that the brain is more malleable than conventional wisdom suggests.

Written all over your face

There’s a reason that so many people hate Comic Sans, the doofy Labrador of fonts. It’s the same reason that Times New Roman looks stately and formal, and Helvetica, in its austere utility, shows up all over the place. Fonts, words, even individual letters and numbers, they can all take on a bit more personality than one might expect. Would the classic riddle ‘why was six afraid of seven?’ be as ubiquitous on popsicle sticks if seven didn’t look just sinister enough to have eaten nine? Maybe not.

This seemingly harmless personification of letters and numbers isn’t an isolated phenomenon. That is, it’s not just limited to a few typographers with intense relationships to these symbols. It’s in nearly everyone. Assistant Professor Lars Strother and his team are getting to the bottom of this little quirk, and the implications range from the very evolution of reading to the need to rethink dyslexia and how it’s understood.

In Strother’s lab, doctoral students Zhiheng “Joe” Zhou and Matthew Harrison are both conducting behavioral and neuroimaging experiments. “When we say ‘behavioral experiments’ in my lab, it typically involves people sitting in front of a screen,” Strother said. “They usually have some sort of task. It could be a face recognition task. It could be a word recognition task.”

A typical task might involve being presented with a face twice and having to determine if half of the face changed. “That in and of itself is not very exciting,” said Strother. “What is kind of exciting, is … Joe is focusing more on word recognition, and Matt is focusing on face perception, but there’s this interesting parallel, which is pretty cool.”

Faces and words have more in common than one might expect.

Strother uses an illustrative example to pull this idea together.

“Imagine you’re giving a lecture to 3,000 cats.” The cats all look roughly alike in a way that people’s faces don’t. That is, the brain evolved to differentiate between human faces based on minute differences that simply aren’t perceived in the same way for cat faces. An English speaker seeing Chinese characters for the first time has the same experience. The differences between the characters aren’t inscrutable, but that person’s brain learned to quickly, effortlessly pick out differences in an entirely different writing system.

“This is the interesting part. You don’t really have to train kids to recognize faces. It’s a natural behavior. Reading isn’t,” Strother said. “It’s a human and cultural invention, and it’s highly complicated.”

“There’s a complementary relationship in terms of hemisphere for faces on the right and words on the left,” said Strother. “The only exception is 20 percent of left-handers, for whom the whole thing reverses.”

“This suggests that as a child learns to read, the neurons in the left side of the brain that have evolved to process faces begin to rewire themselves to aid in reading. That’s what we’re doing to our kids when we teach them to read – we’re forcing them to undo millions of years of evolution that’s caused neural circuits to … respond to faces,” Strother said.

Invariant representations: abstract ideas that remain unchanged in the mind and can tolerate variability

In terms of perception generally, humans have what are called “invariant representations.” When an object moves within the field of vision, the shape seen by the eye changes, but it’s still understood to be the same object. This works with faces, too. A familiar person’s face does not become unrecognizable or turn into someone else’s when it’s seen upside down.

Letters behave quite differently.

“If I move a pen around, it’s still a pen, but if I move an ‘f’ around or, say, the letter ‘p,’ it changes its identity. It goes from a ‘p’ to a ‘d’ to a ‘b’ and even a ‘q,’” said Strother.

“It’s not arbitrary, but it doesn’t follow the rules of nature.” The invariance of visual stimuli mapped to things in the real world, like the pen Strother mentions, differs – and must differ – from the type of invariance found in letters and words mapped to meaning in the language world.

“The neural mapping of letters to words to meaning involves the interplay of visual and non-visual centers of the brain in a way that is unique to written language.”

The goal with reading is so different, in fact, that it has profound implications for how the visual system operates.

As Strother points out, faces and words are very important. “If you can’t see faces: big problem. If you can’t read: really big problem.”

This gets to the meat of what Strother’s group is studying. The argument is that the brain both borrows from and modifies visual and neural mechanisms that evolved to process faces in order to achieve proficiency in reading. This visual system is co-opted, forever altered. One part keeps up the good work it’s been doing with faces; the other learns to read.

Faces in the crowd

From the outside, the brain is symmetrical. The left and right hemispheres have basically the same parts. Much like one’s hands, though, they don’t function the same way. Unlike hands, it’s not that one side is just worse at everything and shouldn’t be trusted to brush teeth. Rather, each side has areas that specialize in different processing tasks. This is known as lateralization.

Lateralization: the tendency for some brain functions to be more dominant in one hemisphere or the other

Not all functions are lateralized. Some tend to favor one hemisphere over the other. Some tasks are split equally between the hemispheres. Until fairly recently, vision was thought by researchers to be one of these, but some physicians had long suspected lateralization within the visual system.

“They took notes on patients that had brain damage to the right or the left, and when there was damage to the left, the most typical symptom was acquired dyslexia,” Strother said. And what did these physicians notice about brain damage to the corresponding area on the right? Their patients struggled with prosopagnosia, more commonly known as face blindness.

Prosopagnosia can be a distressing problem. It’s a bit like living in Strother’s cat lecture example. People with face blindness have trouble picking out the minute differences in faces that seem so obvious to everyone else. Like in the cat example, they go by cues like hair color to try to distinguish between different people, but everyone just kind of looks alike.

Acclaimed writer and neurologist Oliver Sacks wrote about a patient with this disorder in his book “The Man Who Mistook His Wife for a Hat,” which details the progression of the disorder in a music teacher who slowly stopped being able to recognize his students and, as is obvious from the title of the book, didn’t get better from there. It’s easy to divorce this disorder from intelligence and see it as simply a visual processing error, not a sign of mental handicap. Its cousin, dyslexia, is not so lucky.

Symmetry and dyslexia

“Because words lack symmetry, the brain must be able to ignore it to process them,” Strother said. Studies in which methods have been used to “switch off” these neurons wired to ignore symmetry reveal subjects have difficulty making the distinction between letters. Applying the same technique to the corresponding area on the other side of the brain produces no effect on reading ability.

With language processing happening only on one side, words and letters seen from the opposite side must be sent to the correct area for processing, but this isn’t always accurate. “If you get mixed signals between the two, you start mixing up letters,” said Strother. “This happens early in life, age three up to six or seven or even twelve years old.” The information taken in by each eye is being combined using different rules, and when words are run through the facial recognition area, reading becomes exceedingly difficult.

"Dyslexia is not, contrary to folklore, about being an adult and having letters all jumbled. Even when dyslexics believe that, it does not seem to be the case. What is the case is that it occurs for everyone," said Strother. "Everyone struggles with this early on."

This isn’t limited to just ‘p’ and ‘q’ either. Letters and numbers get flipped because children haven’t yet developed this area of the brain. As this flipping is happening, their brains are being retrained to not treat letters and objects in the same way.

The change happens in the high-level vision system, a sort of intermediary step between the eyes and full-on perception. “The original definition of dyslexia is ‘a problem of word recognition in the absence of sensory deficits, specifically visual deficits,’ but at the time, there wasn’t good understanding of high-level vision,” said Strother. The work being done by Strother and his group highlights the need to update this definition and place more emphasis on the visual processing system, with the hope of not only better understanding dyslexia but also helping to eliminate the stigma that comes along with it.

The implications of these findings aren’t just important for the future of dyslexia or for giving explanations for why certain fonts appear to be stretching or sad or giddily delivering messages. If early humans weren’t so social, if recognizing family members, their gestures and other social behaviors hadn’t been so important all those millennia ago, “if that stuff wasn’t in place,” said Strother, “it’s very possible that we might never have evolved a capacity for written language at all.”

Et tu, RNA?

Deoxyribonucleic acid, or DNA, is like the instruction booklet for building and maintaining a human body. “It’s how that booklet is read that makes a skin cell a skin cell or a brain cell a brain cell,” said Assistant Professor Pedro Miura. “I study that reading process.”

Miura’s research is concerned with ribonucleic acid, or RNA, but not common forms. Most RNAs are involved in the encoding of proteins, the building blocks of the human body. Then there’s what Miura says is sometimes referred to as “the dark matter of the genome.” In the last ten years, a host of different RNAs that don’t seem to encode protein have been discovered, and no one is quite sure about what they do. Miura’s group focuses on one of these types of RNA that stands out from all the others: circular RNAs.

These mysterious nucleic acids form as circles rather than RNA’s typical squiggly line. In his postdoctoral work, Miura and his colleagues were the first to show that these RNAs actually increase as fruit flies age – and that there are a lot more of them in the brain, where they are highly expressed. This got them thinking. “Nothing that happens when you age is really good for you,” said Miura, “except for, you know, knowledge and experience. Everything else, physically, is bad.” It stands to reason, then, that these RNAs might not be the greatest thing to have piling up in the aging brain. “We came up with a simple hypothesis: this age-associated increase in circRNAs, it must be bad.”

Fruit flies used in Miura’s research

Drosophila melanogaster aren’t humans. Whatever interesting findings Miura and his group could produce might not be relevant outside of these tiny insects, so they set about hunting down other examples of this peculiar form of gene expression. “Because this is brain tissue and you can’t just start chopping up human brains, we did this in mice first, as a follow-up to the drosophila study,” said Miura. “Using what’s called ‘next-generation sequencing,’ we were able to find that this phenomenon holds.” After mice, they went to worms and found the same thing.

“Across phyla, across different types of species, this thing happens,” said Miura. Their goal now is understanding: why do these circRNAs increase in expression with age, and what mechanisms drive that? Are they actually bad? Will reducing them increase lifespan? There’s no shortage of research to be done. Recent studies have shown connections between circRNA and cancer. “No one has really found their crucial role in the brain or linked them to disease, but that’s where the evidence is pointing based on where they are.”

“What enzymes target circRNA – that’s the next important question,” said Miura. He and his group have found that the main reason these RNAs collect with age is that nothing in the brain breaks them down. Waste RNA is usually taken care of by enzymes called exonucleases, and these enzymes like to be able to start their jobs at one end of the strand of RNA or the other. Circles don’t have ends. “They just stick around,” said Miura. “Transcription is going to make more and more of them. The linear RNAs … get chopped up over time. CircRNAs get made, and they stick around as animals get older.”

This kind of research might seem abstract, but studying these basic processes and attempting to figure out what’s going on in and between cells in the brain helps researchers make smart and informed decisions about how to tackle diseases. Miura doesn’t yet know if the circRNAs are Parkinson’s Disease-related or Alzheimer’s Disease-related. “I’m just studying the fundamental process,” he said. “These things are made; what do they do? It could be nothing.” If this unique type of RNA does turn out to be guilty of causing disease or neurodegeneration with age, it opens up whole new avenues of treatment and diagnosis.

Mind-blowing progress

Miura and his group could not have gotten as far as they have without the help of other researchers around campus. This kind of research does not happen in isolation. Miura has worked with experts across the University – from the “worm guy,” Associate Professor of Biology Alexander van der Linden, to University of Nevada, Reno School of Medicine Assistant Professor Robert Renden, who studies mammalian brains, among other things. He also credits the hardworking and diverse group of University undergraduates, graduate students and postdoctoral fellows in his laboratory.

“I would never have been able to do this stuff without these collaborations. I think that’s kind of a unique thing we have: putting people with different expertise together. It leads to synergistic discoveries that, on your own, you couldn’t have made,” said Miura. “It’s really this community of neuroscientists that makes these things possible.”

When a neuron is born, it looks a lot like any other cell in the body, but it quickly begins to differentiate itself when an outgrowth, called an axon, begins to form. These axons stretch long distances through the body using chemical signals like signposts to find their ultimate destinations. In humans, a motor neuron stretching from the spinal cord to innervate muscles in the limbs can easily be a meter long. It just so happens that certain types of non-protein-encoding RNA work as part of this guidance system – and Miura loves studying RNA outliers.

Working with Associate Professor Tom Kidd and Professor Grant Mastick, Miura realized that their groups were looking at the same genes. Using a new technology known as CRISPR, the researchers have been editing out the genes that produce these RNAs and finding that doing so results in neurological defects in mice and fruit flies. Essentially, the axons can’t find their destinations without these RNAs, which is something that simply could not have been found before this technology became available.

“It’s kind of like a whole new field was born four years ago because we got a better machine to see what’s inside of the cell,” said Miura. “CRISPR has huge ethical and bioethical implications, but right now, for research, it’s transforming what we can do. It’s a huge advance. The way I think of it with CRISPR is: We have this huge telescope – that’s next-generation sequencing – but instead of seeing things far away, we’re seeing very deeply into the cell’s nucleus and seeing, instead of planets and stars, all the different RNA molecules. Discovering these RNAs has been awesome, but to find out what they do, we’ve got to start getting rid of them one by one, and doing that in a rapid fashion in animals wasn’t possible before CRISPR, a four-year-old technology.”

In spite of these tremendous advances, it’s still not easy to study the functions of circRNAs because they come from the same genes that make the more common, protein-encoding mRNAs. With these other RNA types missing, there’s no easy way to isolate the effects of the circRNAs or their absence – at least not yet. That’s another puzzle being worked on.

In a field progressing as rapidly as neuroscience, there are many, many unanswered questions. As Miura puts it, “A lot of progress in neuroscience and other biological research is: we have a new tool that allows us to see things we couldn’t, things we didn’t even know were going on before. Innovation in science – part of it is bright ideas, but a lot of it is getting a better microscope or sequencing tool. In the history of science, … most of these advances are technology-based.”

And technology is booming at this particular point in history. Because of this, there's a special light in the eye of every neuroscience researcher. Each one is right at the beginning of things, and there is so much more to explore. "That's a nice way to say we don't know what we're doing," Miura said laughing.

Epilogue

The neuroscience program at the University of Nevada, Reno is still in its infancy, much like the field itself. The interdisciplinary undergraduate program spans two colleges, was the first of its kind at the University and is in its ninth year. The Ph.D. program only started in 2015. Today, more than 100 professors across campus and across disciplines contribute to the ever-growing program.

Co-director of the program Foundation Professor Mike Webster recalls its beginnings fondly. “The University was already poised; we already had a lot of people and the interest in place. All of this has really just been connecting groups that were already here,” he said. “The University and the administration have been incredibly supportive of that growth.” Webster himself has brought in over $20 million in National Institutes of Health funding to create the Center for Neuroscience, which directly supports much of the groundbreaking research taking place at the University.

Without grants from the NIH’s Centers of Biomedical Research Excellence, none of this would be possible. Researchers wouldn’t have access to neuroimaging and molecular imaging tools. The University wouldn’t be partnering with Renown Health to bring another fMRI machine to the area, ensuring access for the next ten years. There wouldn’t be a new Institute for Neuroscience in the works. There wouldn’t be eight new neuroscience faculty joining the University or eager students in the undergraduate and graduate programs to support researchers. In short, the program wouldn’t be more than a pipe dream, and the University’s groundbreaking researchers would have taken their efforts elsewhere.

There is evidence of the excitement this program generates in every corner of campus. In the music department, Assistant Professor Jean-Paul Perotte is employing a special headset to turn brain waves into live musical performances. Over in biology, Professor Vladimir Pravosudov and his group study the hippocampi of mountain chickadees in the wild, notable because these non-migratory birds cache up to 500,000 food items to survive harsh winters – and remember where they put all of them.

Associate Professor Marian Berryhill is studying head trauma, and she's found that concussions are even more detrimental than previously assumed. Her group was shocked to find that they couldn't design a test simple enough to eliminate differences between subjects who had relatively recently suffered concussions and her control group. She believes helmets are going to be en vogue accessories when this research gets more attention.

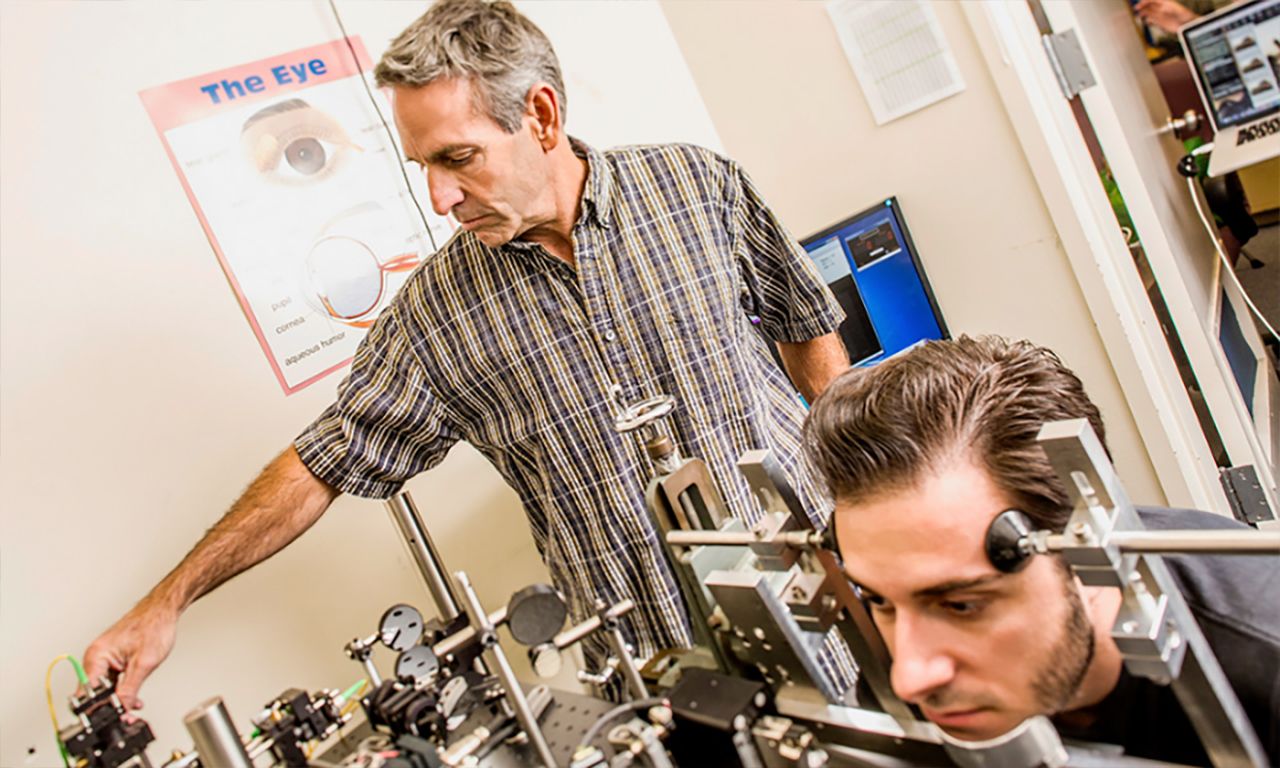

Department of Psychology Chair Mike Crognale uses advanced optics to look directly into the eye and can actually observe and study individual cells reacting to light in real time. Associate Professor Gideon Caplovitz, whose team has taken home several awards for best optical illusion over the years, lectures art students about how neuroscience influences their work and art history.

“The thing about neuroscience is that there is no area that can’t contribute to it because there is no area that it doesn’t contribute to,” Webster said. Even as neuroscience research broadens its myriad impacts, there’s still a lot to be learned at the most basic levels.

“The brain is so, so complex. Even studying simple organisms like a worm, which has 302 neurons, we don’t know how that works,” said Miura. “We know exactly where each one is. We know exactly how they’re connected. We still don’t know how it works. It’s amazing.” When it comes to research, “there’s no end in sight as far as neuroscience is concerned.”