Assistant professor David Feil-Seifer of the computer science and engineering department at the University of Nevada, Reno believes behavioral tics are essential to standard human-to-human interaction because they help ensure that both people are engaged with what the other is saying or doing in a socially appropriate way. He wonders whether, by abstracting what happens in human-to-human interactions and applying it to robot-to-human interactions, we can learn to make a robot engage in a socially appropriate way.

"There's a great study that was done about 10 years ago where researchers at a hospital in England watched a robot that was being put on two floors and made to interact with the nurses by doing very simple tasks like picking stuff up and dropping it off at other places," Feil-Seifer said. "On the postpartum wing, the nurses loved the robot. They gave it nicknames, they worked their schedule around it and they treated it like it was part of the team. The internal medicine wing, however, hated the robot. They gave it far less polite nicknames, and when people weren't watching they would try to lock it in a closet. Nothing had changed other than the different types of medicine being practiced and the different types of medical culture that come with that.

"Anyway, what happened was, when the robot got in their way, even though the staff knew it was just the program and that it wasn't behaving badly intentionally, on a more reptilian level, they felt like the robot was purposefully trying to make them angry. So, with the NSF grant, we're really thinking about how to sort out this kind of social robotics problem."

Feil-Seifer is referring to Bilge Mutlu and Jodi Forlizzi's 2008 study "Robots in Organizations: The Role of Workflow, Social, and Environmental Factors in Human-Robot Interaction." This study is part of what helped spark Feil-Seifer's own $500,000, three-year National Science Foundation grant, awarded this fall, under which he will create models for socially aware robotic navigation.

"The problem I'm interested in is how to make robots autonomously be able to act appropriately in what's called a social navigation setting," Feil-Seifer said. "So we're looking at how a robot should be standing or moving when it's in a hallway, or in a tight space, or moving through a large group of people. Any given social situation, essentially."

The first year of Feil-Seifer's social navigation grant will consist of collecting data to help construct models of natural human movement. The data will come from the University's own students as they make their way around the Scrugham Engineering and Mines building on campus.

"We have ankle-level laser sensors that we're setting up in groups around the hallways [of SEM]," Feil-Seifer said. "What we'll be doing is figuring out how people move in a natural, social setting."

The sensors do not collect identifiable information. Instead, the lasers locate legs by looking for cylindrical objects, in this case pant legs (shorts and skirts cannot be detected). The sensors then group the objects in pairs and read them as leg movement. Once enough movement data is collected, Feil-Seifer and his team will begin to build the movement models that will help guide their robot.
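
The pairing step can be illustrated with a minimal Python sketch. It is not the team's code: the function name `pair_legs`, the 0.5-meter `max_leg_gap` threshold and the sample coordinates are assumptions made for illustration, and a real system would also need to follow each pair over time.

```python
import math

def pair_legs(leg_points, max_leg_gap=0.5):
    """Greedily pair leg detections that lie within max_leg_gap meters.

    leg_points is a list of (x, y) centers of cylindrical objects found
    in one laser scan. Returns one (x, y) midpoint per inferred person.
    The 0.5 m default is an illustrative assumption, not a measured value.
    """
    # Collect every candidate pair that is close enough, closest first.
    candidates = []
    for i in range(len(leg_points)):
        for j in range(i + 1, len(leg_points)):
            gap = math.dist(leg_points[i], leg_points[j])
            if gap <= max_leg_gap:
                candidates.append((gap, i, j))
    candidates.sort()

    used = set()      # indices of legs already assigned to a person
    people = []
    for gap, i, j in candidates:
        if i in used or j in used:
            continue
        used.update((i, j))
        (x1, y1), (x2, y2) = leg_points[i], leg_points[j]
        people.append(((x1 + x2) / 2, (y1 + y2) / 2))
    return people

# Two people plus one stray detection that has no partner.
scan = [(0.0, 0.0), (0.25, 0.0), (2.0, 1.0), (2.0, 1.25), (5.0, 5.0)]
print(pair_legs(scan))  # [(0.125, 0.0), (2.0, 1.125)]
```

Only positions are kept in this sketch, which is consistent with the point that the sensors do not record who is walking by.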

"We're going to look at how people move over time, and then use that to figure out how people are moving and name that movement, so to speak," Feil-Seifer said. "We'll have people passing in the hallway, people meeting each other, people walking away from each other, people moving around obstacles - just building up our movement library."

Putting the Robotic Movement Models to Use

Though Feil-Seifer and his team are still in the early stages of collecting data, their plans for putting the movement models to use are well into theoretical and practical development.

"What we want to have is a lot of interaction and navigation information that is good and bad," Feil-Seifer said. "Everything from that awkward back-and-forth dance you do with people in the hallway, to just slowing down to avoid bumping into someone - we want to be able to say ‘here's good behavior, so plan to copy that, and here's bad behavior, so plan to stay far away from that.'"

Feil-Seifer anticipates that the data he collects, and the movement models built from it, will drive the success of the robot's choices, both by keeping it moving swiftly and by giving it a level of social awareness characteristic of human navigation.

"There are two things that happen when we want the robot to move," Feil-Seifer said. "If we have a map of the environment, we know where we can and can't go, so we plan a path that basically figures out the shortest way to the goal that's possible within the environment. And then, as the robot starts to move along the path, we have to deal with the fact that there may be an obstacle in our way, and we have to think of a way to get around that."

Ultimately, Feil-Seifer's movement models will come into play in how the robot reacts to obstacles in its way. Once the robot encounters an obstacle, it will generate a number of possible trajectories based on its forward velocity and turning velocity, all within a widening semi-circle. The robot must then choose a trajectory that neither leads to a collision nor deviates greatly from its planned path, which would result in unwanted or unnecessary movements.

"Along with looking at how close the robot gets to its goal, and how it keeps itself on its path, we're also looking at the social appropriateness of its movements around obstacles, especially when that obstacle is a person," Feil-Seifer said. "The robot needs to compute 60-to-100 sample trajectories in a 10th of a second - so between 600 and 1,000 computations a second - in order to make sure the movement is avoiding the obstacle and maintaining social appropriateness by staying on the right side of the hallway, not getting too close to the wall, and not hindering the movement of the obstacle if that obstacle happens to be a person."

The University's Robotics Lab

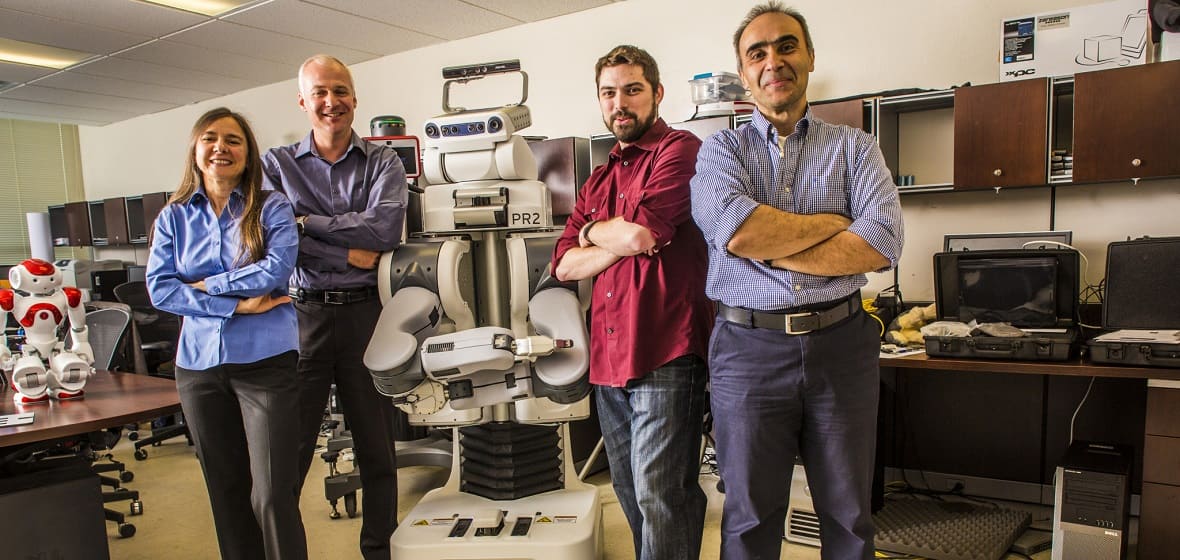

The main robot Feil-Seifer will use throughout the research is the PR2 from the robotics developer Willow Garage.

The University's PR2 robot

"One of the reasons the University is an attractive place to pursue this experiment is because we have some robots that even other top-flight robotics programs don't have," Feil-Seifer said. "We were able to demonstrate the capacity to do proper investigations into this area of robotics."

The PR2's humanoid features suit the human-experience-heavy aspects of the research. The robot's head contains two stereo vision optics, which allow it to see objects in front of it, and bolted to the crown of its head is a Microsoft Kinect camera that gives the PR2 the ability to pick out objects within a scene. Feil-Seifer has found, however, that the PR2's mobility will be its most valuable feature.

"What's really interesting about this robot, and what sets it apart from other robots we have in the lab, is that it can translate any direction," Feil-Seifer said. "It can move side-to-side or diagonally, and at any point, we can have it move in any direction very quickly while maintaining a steady orientation which will be very helpful when it comes to studying socially appropriate behavior."

Overall, Feil-Seifer, whose dissertation work also looked into robotic social navigation, is excited to take his research outside of specialized environments.

"The prototype that was part of my dissertation work only worked within an instrumented room," Feil-Seifer said. "You needed to have all kinds of sensors set up, you needed to have a camera, and you needed to wear a special kind of shirt so that the robot could see you. That all worked well enough, but what we're pursuing right now is to create a robot that can navigate in many different social settings and maintain social appropriateness."